How Midjourney Evolved Over Time (Comparing V1 to V7 Outputs)

Today, Midjourney is one of the best AI image generators available. But it hasn't always been that way. Here's a look at how Midjourney evolved from its early struggles to its polished V7 model.

John Angelo Yap

Updated September 8, 2025

Different versions of the same AI, generated with Midjourney


It's hard to believe that just a couple of years ago, AI was treated more as science fiction than reality.

It wasn't until November 2022 that ChatGPT became publicly available. Before that, DALL-E was accessible only to a select few, and only a handful of companies, like DeepMind and OpenAI, were investing heavily in deep learning.

One of the earliest mainstream AI products also launched early that year: Midjourney. It now has millions of daily users worldwide. With its latest V7 model, we're witnessing how awesome and terrifying (thanks to deepfakes) AI art can be, in 2025 and for years to come.

Midjourney had a challenging start, to say the least. Now that more than three years have passed, we can look back at how much it has improved. Here is what Midjourney looked like in its earliest days, compared to where it is today:

Midjourney's Evolution Through Images

People who came late to the game never experienced Midjourney's rough beginnings. There was a time when people questioned whether AI image generation was worth pursuing at all, given the poor results from both DALL-E and Midjourney. Here are some reminders of how far we've come since then:

Portraits

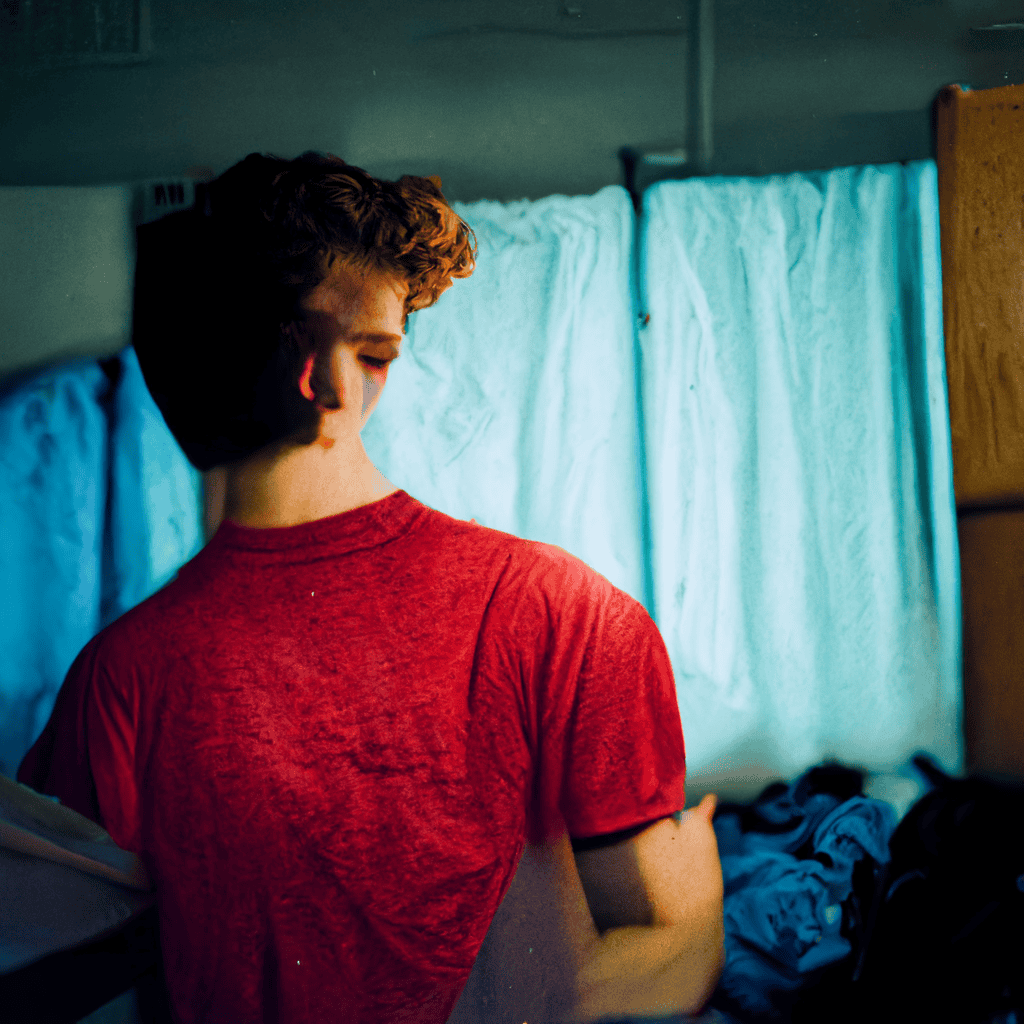

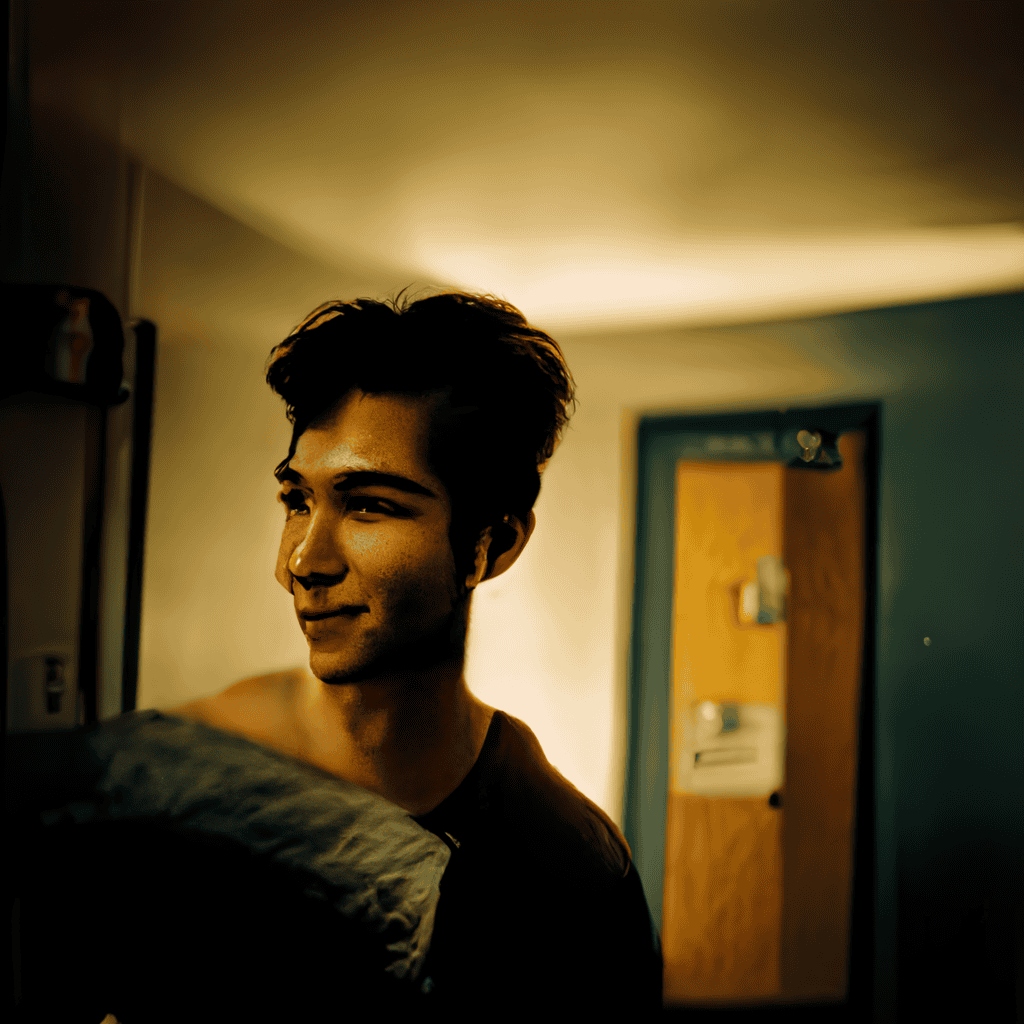

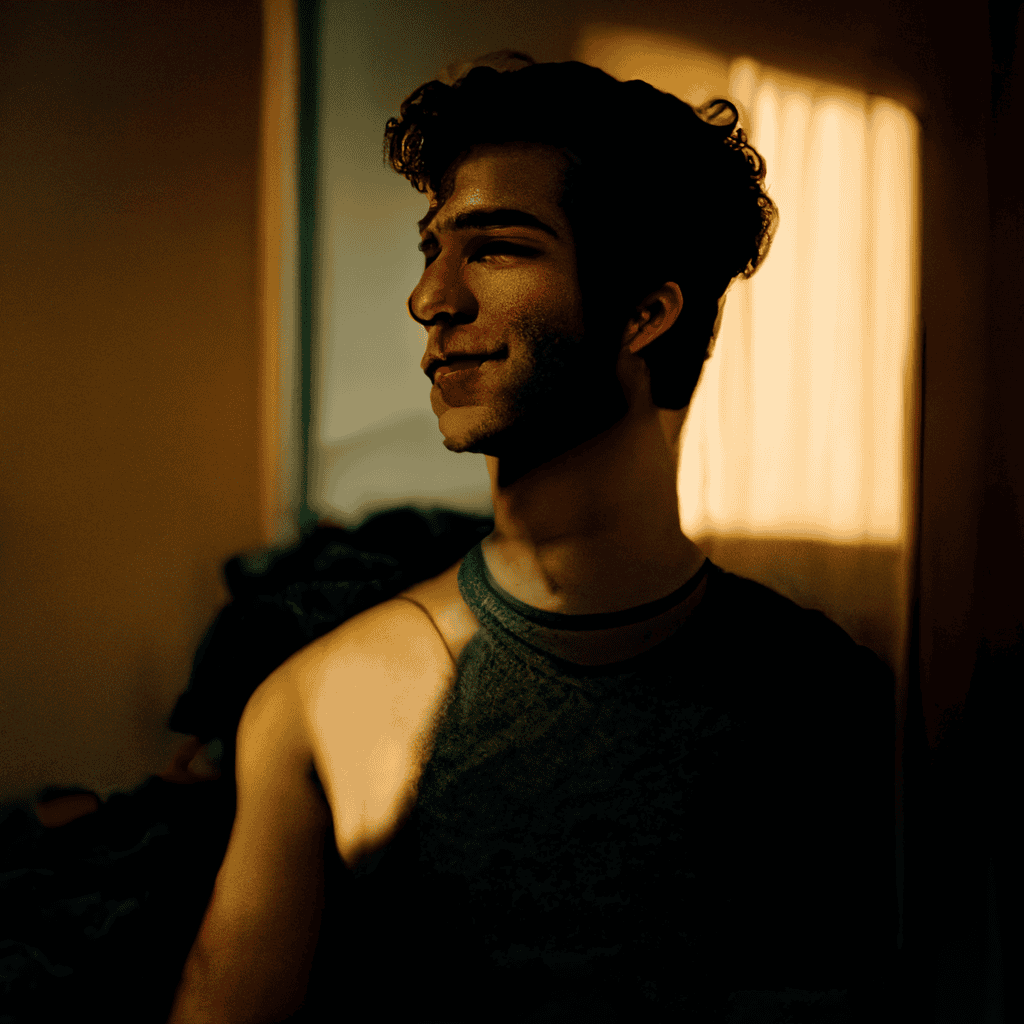

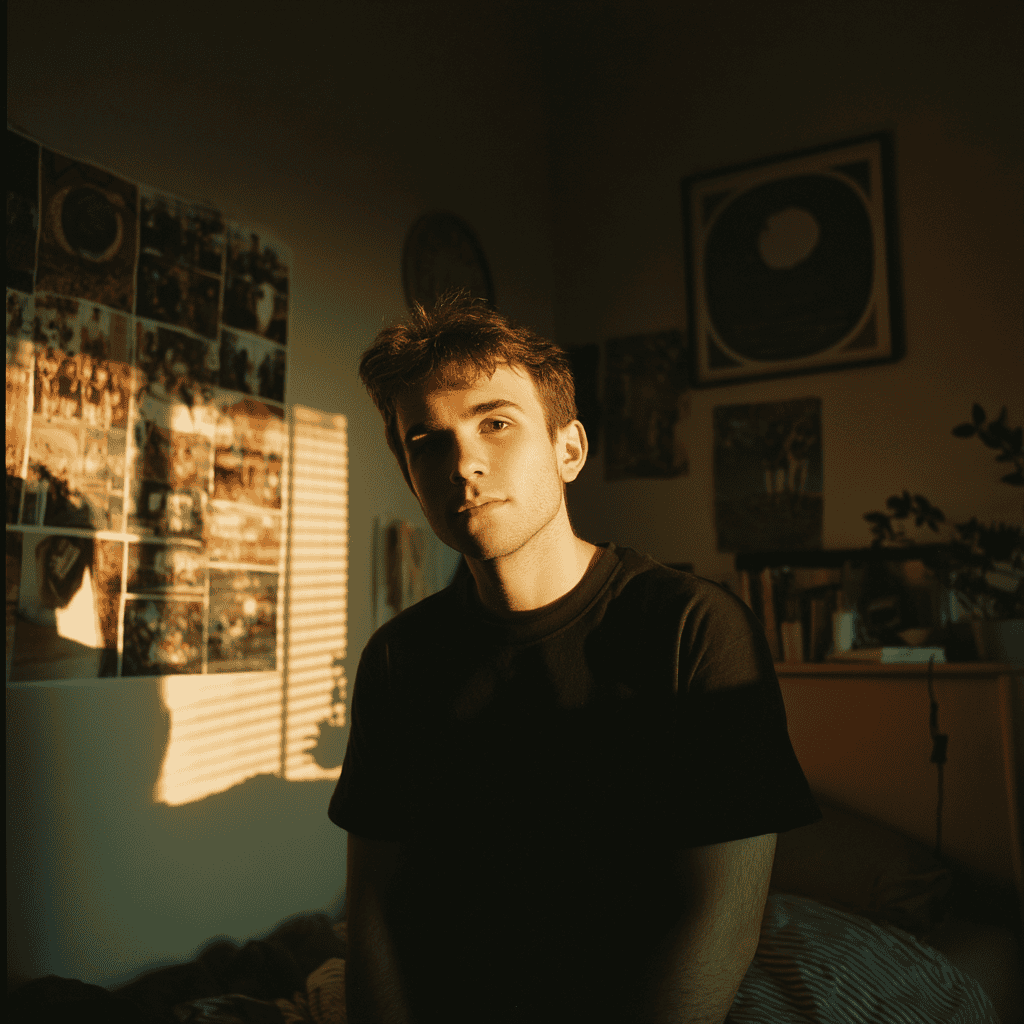

Prompt: a young man in a plain black top, indie, retro, medium format photography, warm light, dorm room aesthetics, candid

The early days of Midjourney weren’t exactly pretty.

Back in V1 through V3, you could sort of tell it was aiming for a human figure, but the execution was a disaster. Faces looked like lumpy potatoes mashed into awkward angles, and the dorm room backgrounds here felt more like abstract art than anything remotely livable.

V4 was the first real turning point. At least humans looked like humans now. The weird limbs and broken proportions were gone, but the images still carried that unsettling "something's not right" vibe that makes your brain twitch without knowing why.

By V5, the anatomy had mostly stabilized, but the style leaned too far in the other direction. Instead of casual shots, you ended up with what looked like staged photoshoots. V6 pushed the realism further, but lighting and shadows still betrayed the illusion.

And then V7 landed. Suddenly, it felt like someone had just pulled out their phone and snapped a quick dorm picture. Natural posture, casual angles, realistic lighting — it finally looked like the everyday scene I’d been asking for all along.

Landscapes

Prompt: the view from the peak of a mountain, sea of clouds, vast and mesmerizing landscape, Photography, captured with a Fujifilm GFX 100S medium format camera

The early Midjourney landscapes were rough. V1 through V3 couldn’t even tell mountains from clouds — they just melted together into a surreal, scale-less mess that felt more fever dream than mountain view.

V4 got closer but still fumbled perspective, even tossing in a random human I never asked for. By V5, though, things finally clicked: mountains looked like mountains, clouds stayed clouds, and the scene actually made sense.

V6 and V7? A whole different league. The light, the textures, the drama: it all looked like pro-level landscape photography. Honestly, the gap between the two is so small that Midjourney may have hit its peak in this category.

Animal Photography

Prompt: grey british shorthair cat, medium shot, grainy disposable

If you want nightmare fuel, just look at what V1 did with a cat prompt. The thing looked less like a pet and more like a fur-covered tumor — pure horror show.

V2 and V3 cleaned it up slightly, but the eyes were still haunting, and overall it felt like the AI had never seen a cat in its life.

V4 and V5 finally delivered something recognizable, though the fur had that plasticky, blended AI look and lacked the texture of real strands.

V6, though, nailed it. The fur looked natural, the texture felt believable — like a grainy disposable camera shot. Strangely enough, I’d even argue V6 beat V7 here. The latest version stumbled with weird lighting and a torn-up patch near the ear.

Product Photography

Prompt: commercial photography, a scented candle, on pastel purple background, with flowers, minimal, dreamy, soft lighting, center composition

Early Midjourney had no clue what a candle was supposed to look like. V1 and V2 spat out weird abstract blobs — nothing close to a candle, let alone a usable one.

V3 figured out the basic shape, but it still screamed “AI”: flat light, odd textures, zero realism. V4 produced something recognizable but painfully boring, with flower details that looked like doodles. V5 sharpened things up but still lacked that polished, product-photography vibe.

Then came V6 and V7 — and suddenly it was a whole new level. Perfectly centered candles, soft warm lighting, flowers that actually looked real. These versions didn’t just make candles; they made catalog-ready shots that could fool anyone into thinking they came straight out of a studio.

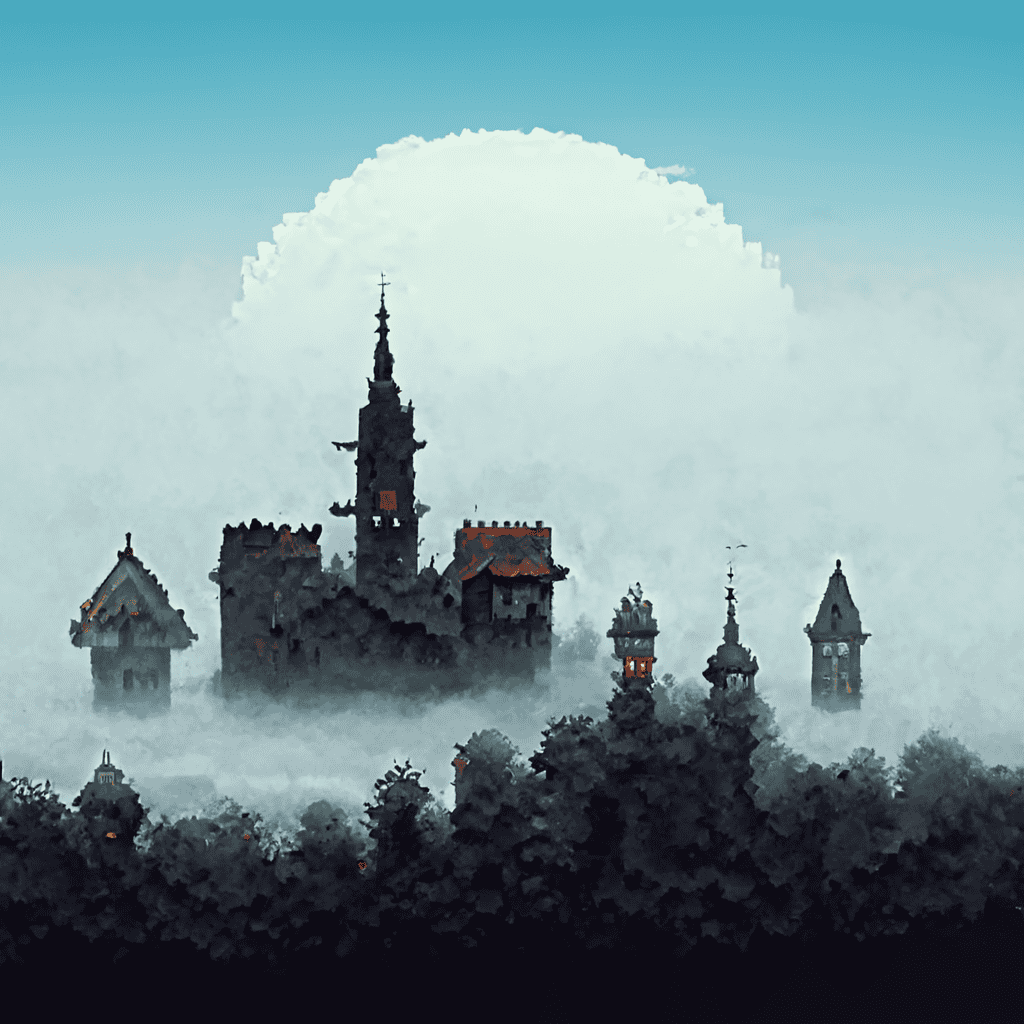

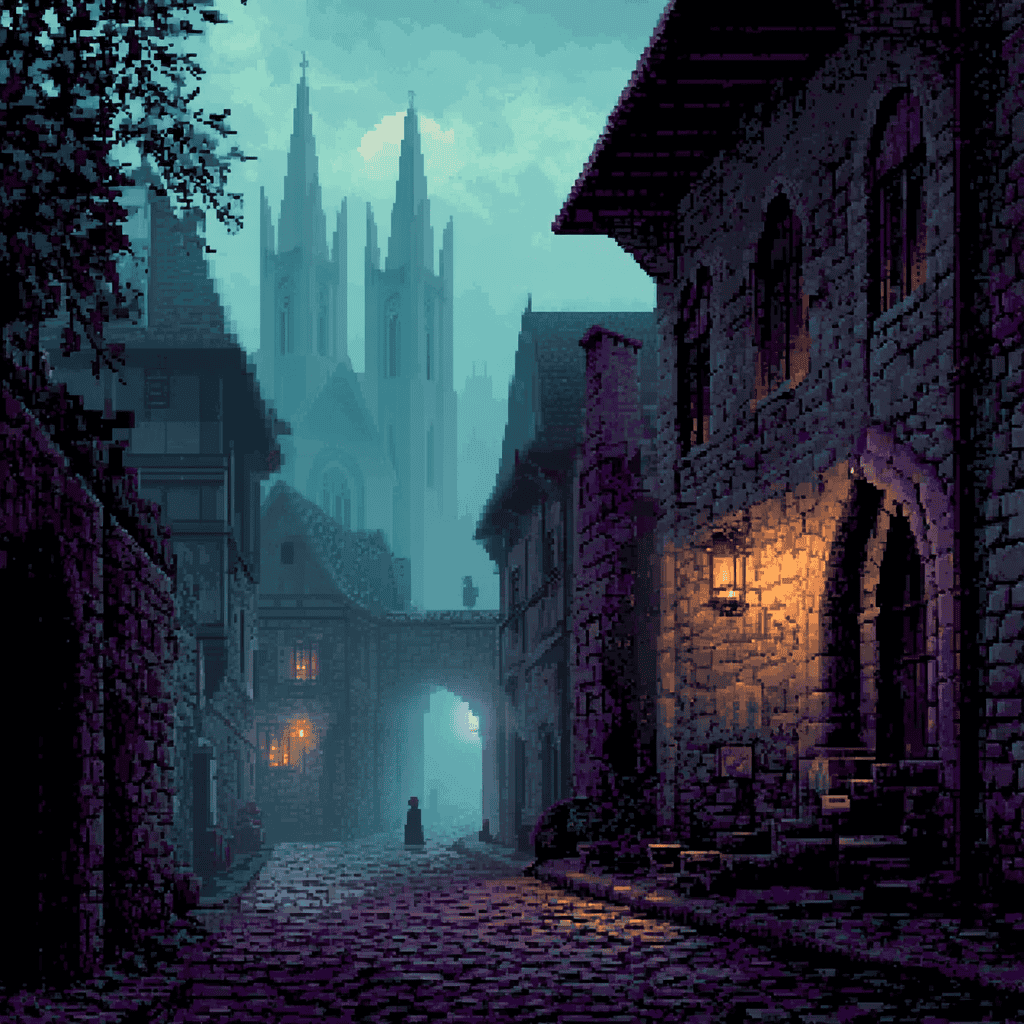

Pixel Art

Prompt: pixel art scene, a mythical medieval town with fog, dark fantasy, 8-bit game

V1 through V3 were, let's be honest, awful, but they still surprised me. Even that early on, the AI managed to piece together crude medieval towers, which is more than can be said for some other categories. Rough? Yes. Recognizable? Barely. But the seeds were there.

V4, ironically, might be the peak. It nailed the chunky blocks, limited palette, and authentic look that actually felt like pixel art.

By V5, V6, and V7, the “improvements” worked against the prompt. The images were detailed, glossy, and full of gradients—more like an HD imitation of pixel art than the real deal. Impressive, sure, but missing the charm of the medium.

Illustrations

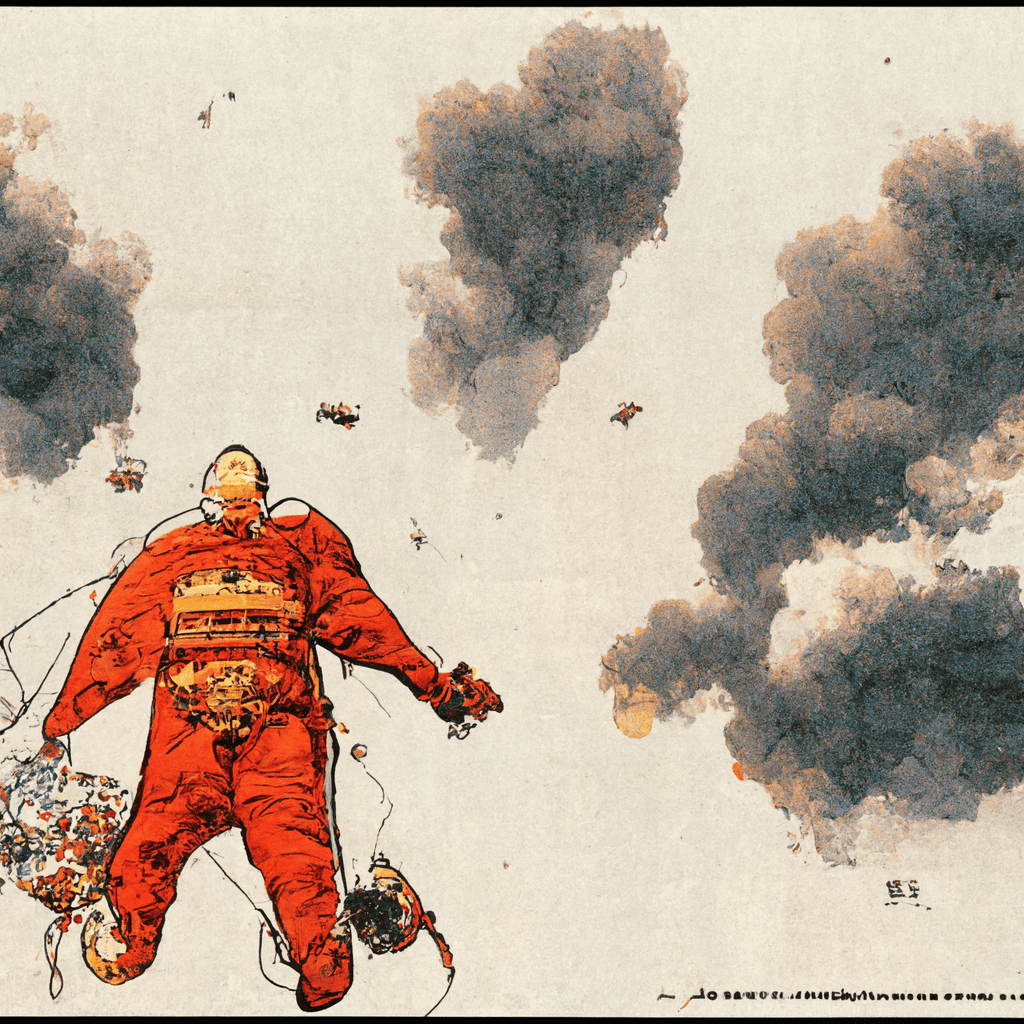

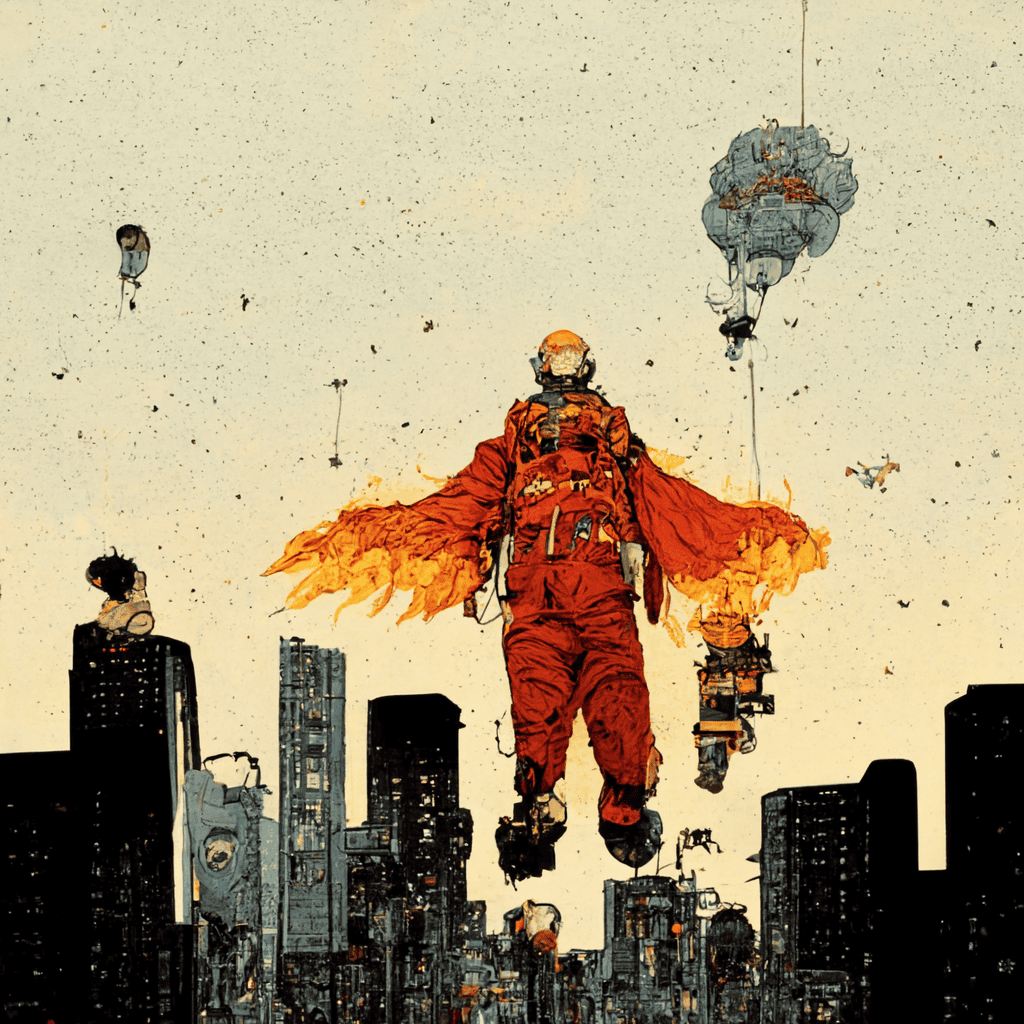

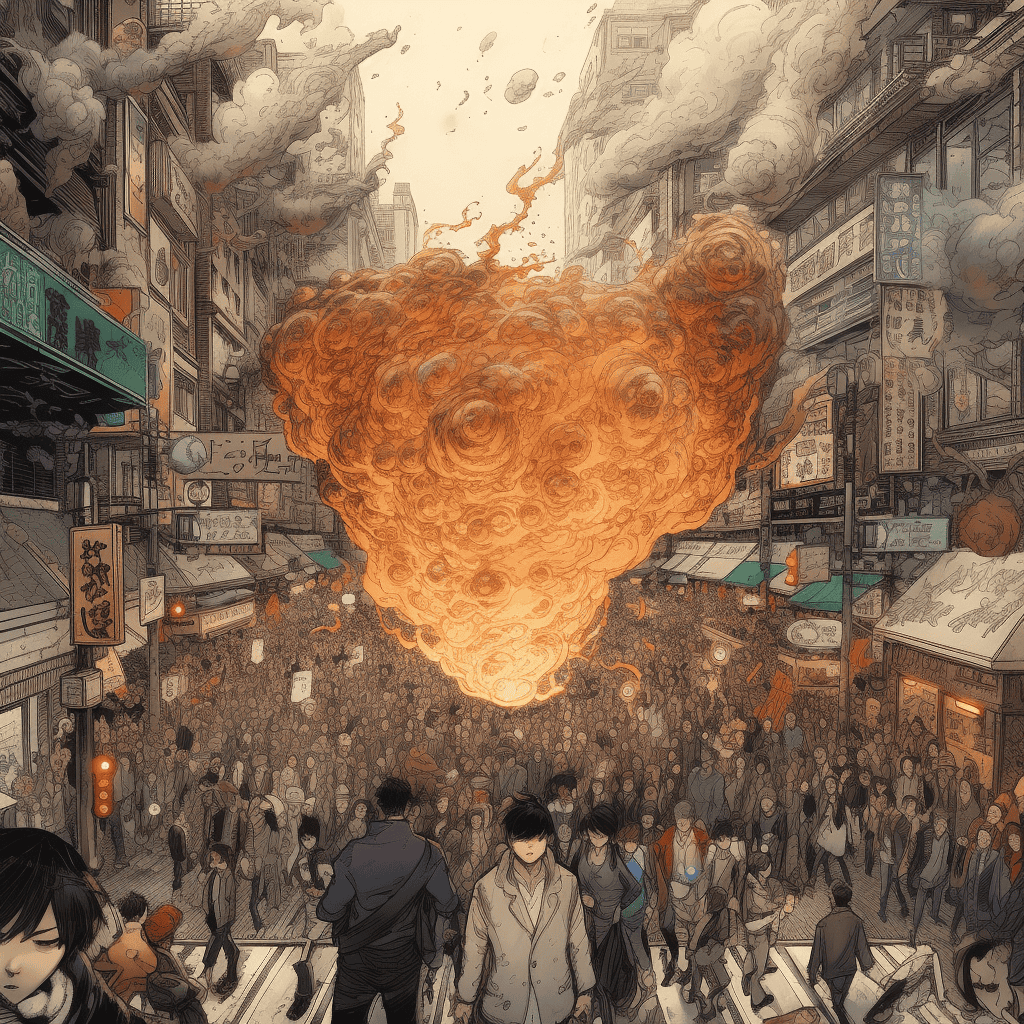

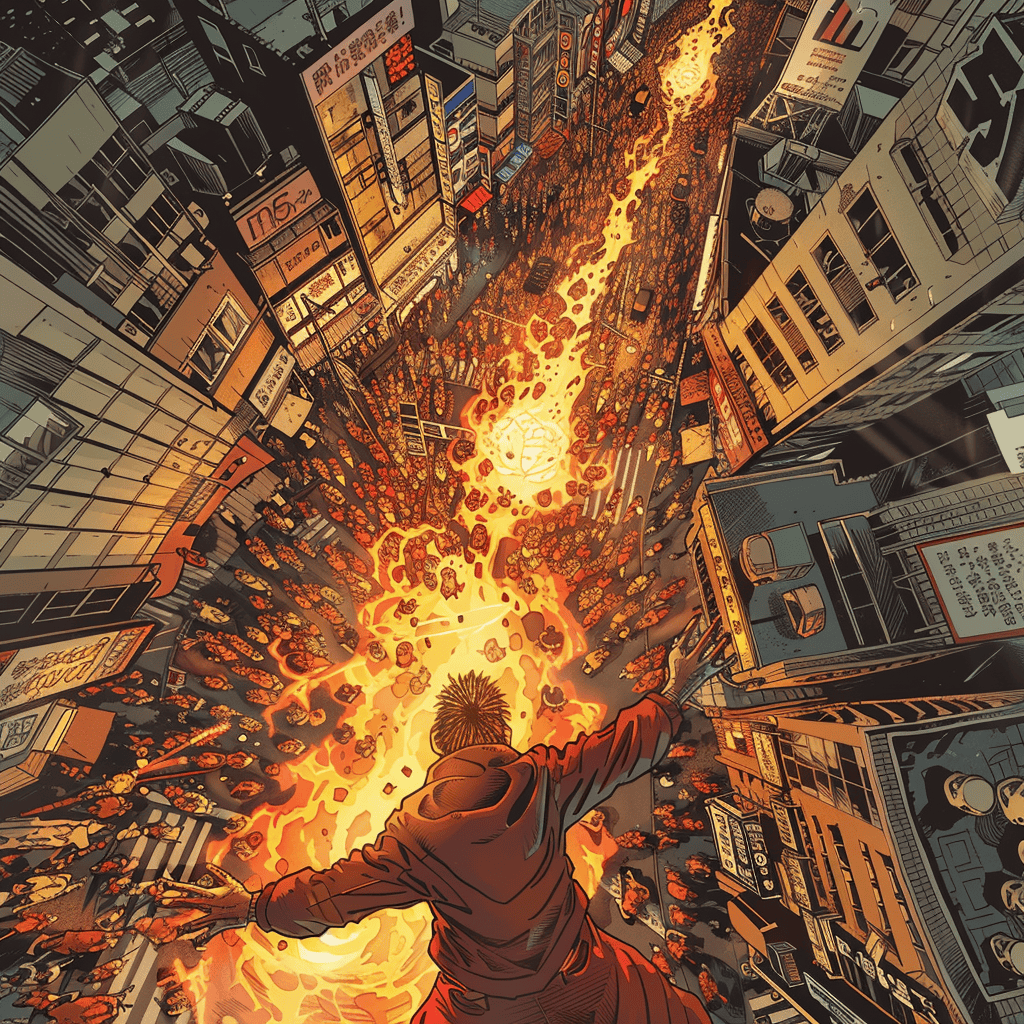

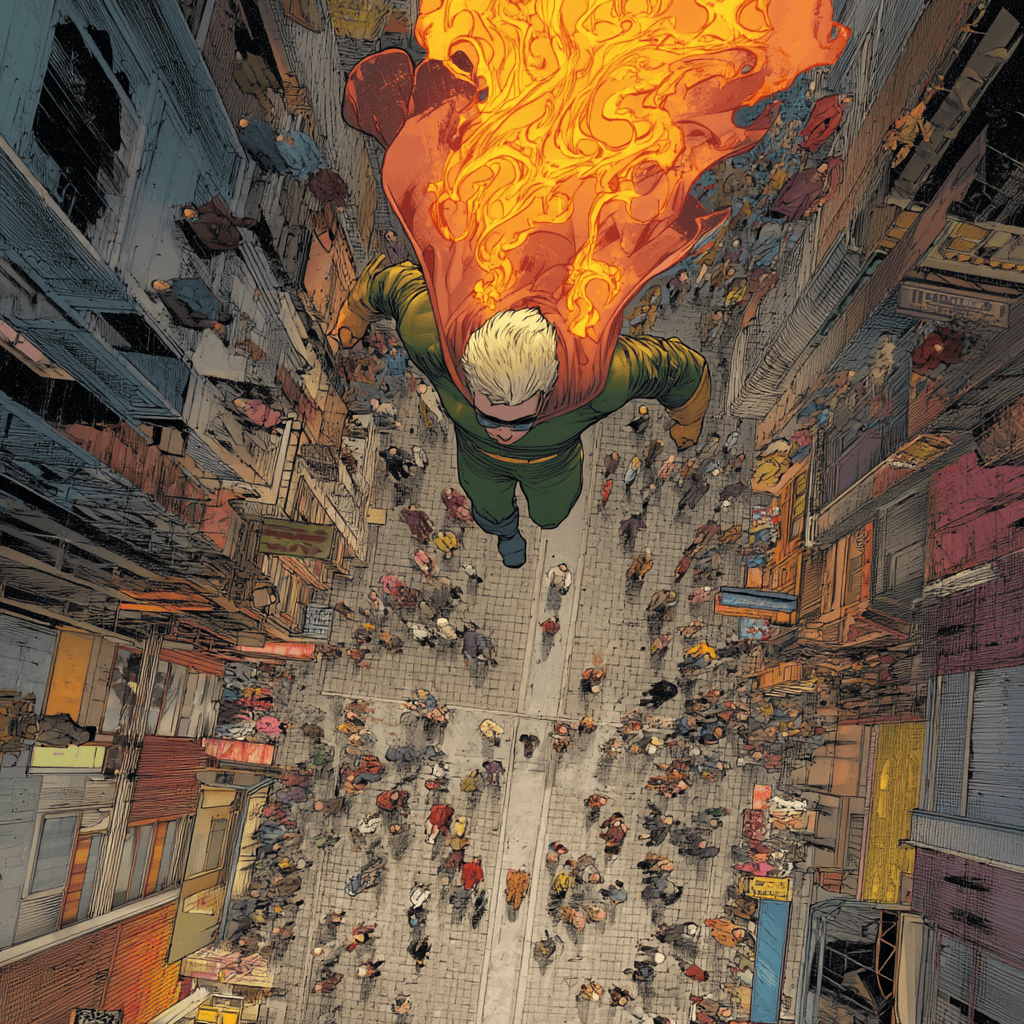

Prompt: a costumed supervillain with fire powers looking down a street full of people while flying, overhead perspective, graphic novel illustration style of katsuhiro otomo, comic book style, jim lee, brian michael bendis

V1 through V3 only got one thing right: the word “supervillain.” Everything else? Gone. No backgrounds, no real perspective, and the characters themselves looked like melted action figures instead of actual antagonists.

V4 and V5 were better, but still way off. V4 kept the villain grounded when the prompt clearly said flying, while V5 gave me a weird blob of fire hovering over a crowd—no costume, no identity, no menace. The angles felt wrong, the perspective sloppy, and the comic book style inconsistent at best.

Then V6 and V7 changed everything. Suddenly the prompt clicked: a flying villain with fire powers, seen from above, terrorizing a crowded street. The panels looked straight out of a professional comic—dynamic poses, dramatic lighting, and that perfect mix of stylization and realism. For once, it felt like Midjourney understood not just the words but the intent behind them.

Some Observations

After going through all these images, I've concluded that each Midjourney release after V3 seems to have focused on a few specific areas. To be more specific:

- V4: Prompt cohesion and output structure. Figuring out how to put shapes and ideas together to create a coherent image.

- V5: With coherent images figured out, the focus shifted to improving the generator's overall creativity.

- V6: One of the biggest updates so far, with significant improvements in realism, text generation, and prompt understanding.

- V6.1: A minor upgrade over V6, if that. It's better at realism than the base model, but it somehow got worse in certain areas.

- V7: Breakthrough in consistency, faces, hands, and lighting — plus new platform features.

What’s New with V7?

Since last year, Midjourney has been rolling out updates that make the whole platform feel less like a text-to-image generator and more like a full creative suite.

The big headline is, of course, V7 itself, which became the default model in mid-2025. It’s sharper, faster, and far more accurate with prompts, even short ones. Hands and faces (the eternal AI struggle) look more realistic now, textures feel richer, and characters actually stay consistent across multiple renders.

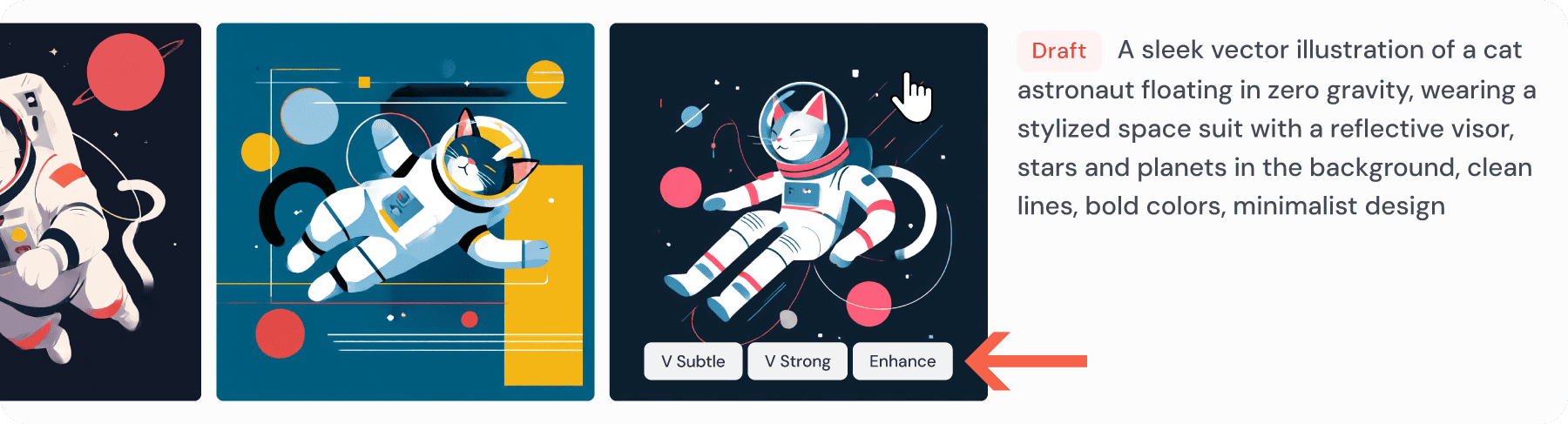

But the features don't end there. Midjourney now has a Draft Mode, which spits out lower-quality test images in seconds at half the cost, which is perfect for experimenting before spending full GPU time on the "real" version.

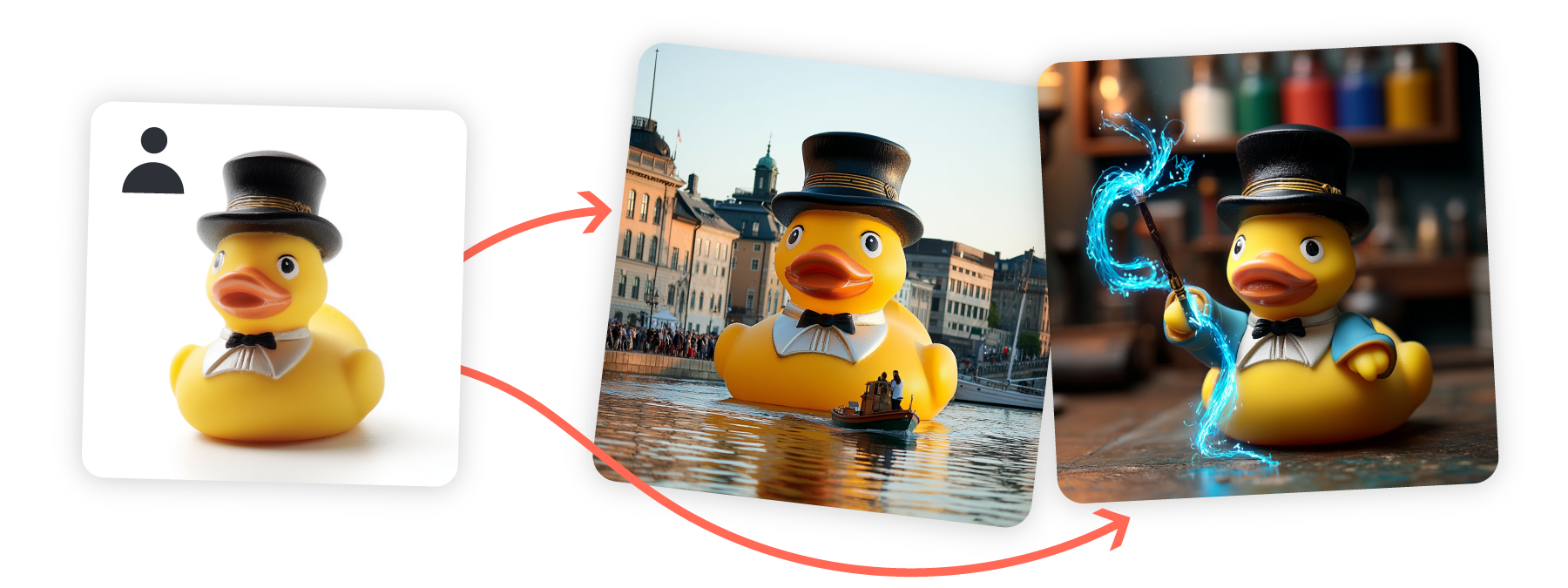

There's also Omni Reference (--oref), a big help for keeping characters or objects consistent across multiple generations. And if you've ever wanted the model to "learn" your aesthetic? V7 turns on personalization by default, letting you tune it to your tastes just by rating images.

Then there’s the biggest leap: video generation. With new motion prompts and editing controls, you can animate your art directly inside Midjourney. It’s no longer just about still images—you can literally tell a story in motion. Add in the new layered image editor, smart selection tools, and even retexturing options, and it’s clear that Midjourney is aiming to be more than a generator—it’s becoming a full design environment.

On top of that, you’ve now got Conversational Mode and Voice Input for a smoother prompting process, plus experimental features like the Aesthetic Parameter for fine-tuning vibe, and HD Mode for high-definition video.

All of this makes Midjourney feel less like a “cool AI toy” and more like a serious creative platform. The core model is better than ever—but it’s the surrounding tools that really show how far the platform has come in just a year.

The Bottom Line

Looking back, it’s wild to see how far Midjourney has come. From the nightmare-fuel cats and potato-headed humans of V1 to the professional-grade photography and cinematic scenes of V7, the evolution feels less like an upgrade and more like a complete reinvention.

Each version fixed something big — whether it was anatomy, lighting, or consistency — and by the time we hit V7, the model wasn’t just “good at AI art,” it was producing images that could fool you into thinking they were real photos or panels straight out of a comic book.

And with the newest features layered on — video generation, personalization, Draft Mode — it’s clear Midjourney isn’t slowing down. If anything, it’s edging closer to being a full creative suite rather than just a text-to-image generator.

The jump from V1 to V7 shows more than technical progress: it shows how quickly AI art has matured. And if this is what Midjourney can do in just a few short years, V8 and beyond might blur the line between human-made and AI-made art even further.
