TruthScan vs. DALL-E Images - TruthScan AI Image Detector

Outdated or not, DALL-E’s images still float around online. TruthScan’s accuracy shows it’s more than ready to call them out.

John Angelo Yap

Updated August 15, 2025

A policeman chasing after a robot painter, generated with GPT-4


Not that long ago, AI image generators had a certain… look. The eyes were too glossy. The hands were a mess. The backgrounds looked like someone had copied and pasted random chunks of Google Images. You could spot them instantly.

Then along came models like OpenAI’s DALL-E, and for a while, it changed the conversation. Suddenly, AI images weren’t just novelties — they were clean, high-res, contextual, and sometimes even good enough to fool people.

But here’s the thing: in 2025, DALL-E isn’t the top dog anymore. It was a big leap at the time, but newer models like GPT-4o and Midjourney v7 have pushed the bar so high that DALL-E now feels like the “good for its time” chapter in the history book.

That’s where TruthScan comes in. It’s not here to make art. It’s here to call it out, whether that art came from a cutting-edge model or one that’s a couple of generations old. In this article, we put TruthScan to the test against images generated by DALL-E.

What is TruthScan?

TruthScan is basically a reality filter for the internet. Its job is simple on paper: figure out if what you’re looking at is authentic or AI-generated. In practice, that’s a lot harder than it sounds — especially now that image models are aiming for near-perfect realism.

Here’s what TruthScan brings to the table:

- AI Image Detection – It claims 99%+ accuracy in spotting AI-generated images, including outputs from DALL-E, Midjourney, Stable Diffusion, and the new wave of GPT-based image tools.

- Deepfake Detection – Whether it’s a celebrity “saying” something they never did or a face swap meant to mislead, TruthScan looks for subtle facial anomalies, texture mismatches, and unnatural transitions.

- Manipulation Analysis – Finds signs of object removal, background replacement, or compositing work — the kind of changes that make an image misleading without being fully synthetic.

- Bulk Processing – For platforms that need to screen thousands of images per day, TruthScan can scan in batches or run in real time through an API.
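To make the bulk-processing idea concrete, here is a minimal Python sketch of batch screening. It does not use TruthScan’s actual API, which this article doesn’t document: `score_batch` is a hypothetical stand-in for whatever call returns one AI-likelihood score (0 to 1) per image, and the `batch_size` of 50 and `threshold` of 0.5 are illustrative assumptions, not TruthScan settings.

```python
from typing import Callable, Iterator


def batched(items: list[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def screen_images(
    paths: list[str],
    score_batch: Callable[[list[str]], list[float]],  # hypothetical detector call
    batch_size: int = 50,     # assumed batch size, not a TruthScan limit
    threshold: float = 0.5,   # assumed cutoff for flagging an image as AI-generated
) -> dict[str, bool]:
    """Score images one batch at a time and flag those at or above `threshold`."""
    flagged: dict[str, bool] = {}
    for batch in batched(paths, batch_size):
        scores = score_batch(batch)
        if len(scores) != len(batch):
            raise ValueError("score_batch must return one score per image")
        for path, score in zip(batch, scores):
            flagged[path] = score >= threshold
    return flagged


def fake_scorer(batch: list[str]) -> list[float]:
    # Hypothetical stand-in: pretend files named "ai_*" score high.
    return [0.97 if path.startswith("ai_") else 0.05 for path in batch]


result = screen_images(["ai_cat.png", "photo.jpg", "ai_dog.png"], fake_scorer, batch_size=2)
print(result)  # {'ai_cat.png': True, 'photo.jpg': False, 'ai_dog.png': True}
```

Batching keeps each request small and predictable. In a real deployment, `fake_scorer` would be replaced by a client for whichever detection service you actually use.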

The whole point is to catch the fake before it has a chance to spread.

What is DALL-E?

DALL-E was OpenAI’s big swing at image generation before GPT-4o came along. When it launched, it was genuinely groundbreaking — it could take a simple text prompt and generate something visually coherent, often surprisingly creative.

Notable features in its prime:

- Multiple styles – Photorealism, digital painting, 3D render, sketch art.

- Prompt flexibility – You could be vague or hyper-specific, and it would usually give you something usable.

- Inpainting – Change part of an image without regenerating the whole thing.

- High resolution – Images that were good enough for web use without obvious compression artifacts.

But in 2025, DALL-E is showing its age. The textures aren’t as sharp. The lighting often feels artificial. And once you’ve seen what newer models can do with detail, composition, and realism, DALL-E’s outputs start to look… safe and outdated. Like stock photos that never quite make it past the “close enough” stage.

Where DALL-E Shows Its Age

Put a DALL-E image next to one from GPT-4o or Midjourney v7, and the differences jump out:

- Texture realism – Newer models get the micro-details right. DALL-E often flattens them.

- Lighting physics – Shadows and reflections in newer tools feel physically accurate. DALL-E’s can feel staged.

- Complex interactions – In GPT-4o, a hand holding a glass of water usually looks right, down to the shadow. In DALL-E, it might look fine until you notice the glass has no shadow at all.

- Background depth – Newer tools create layered, atmospheric backgrounds. DALL-E sometimes feels like a cutout in front of a wallpaper.

These “tells” are exactly what a tool like TruthScan can lock onto — the digital equivalent of spotting a forged signature.

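TruthScan vs. DALL-E: Accuracy Results

We ran seven DALL-E-generated images through TruthScan and recorded its verdict and AI Likelihood Score for each one.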

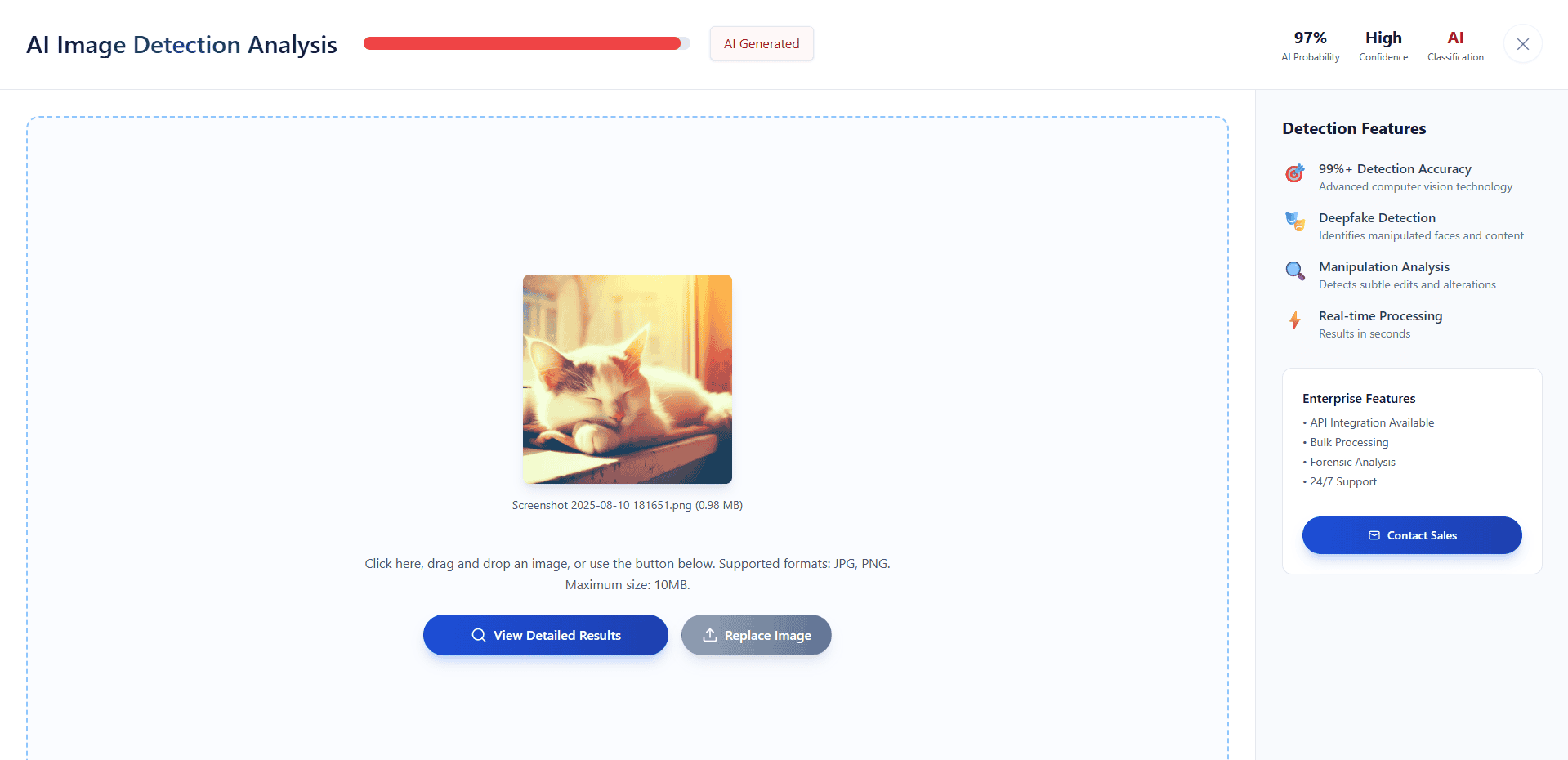

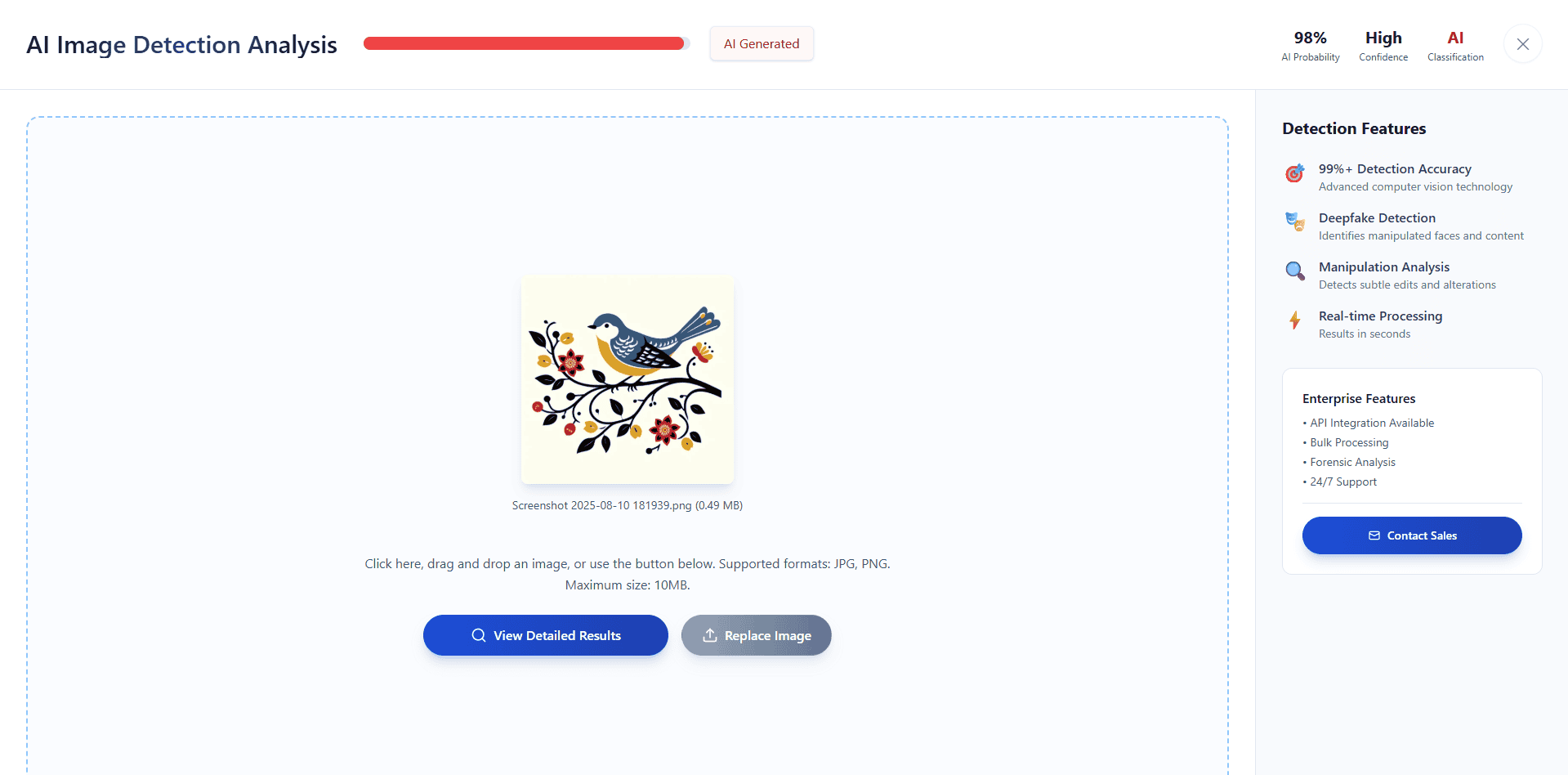

Test #1

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 97%

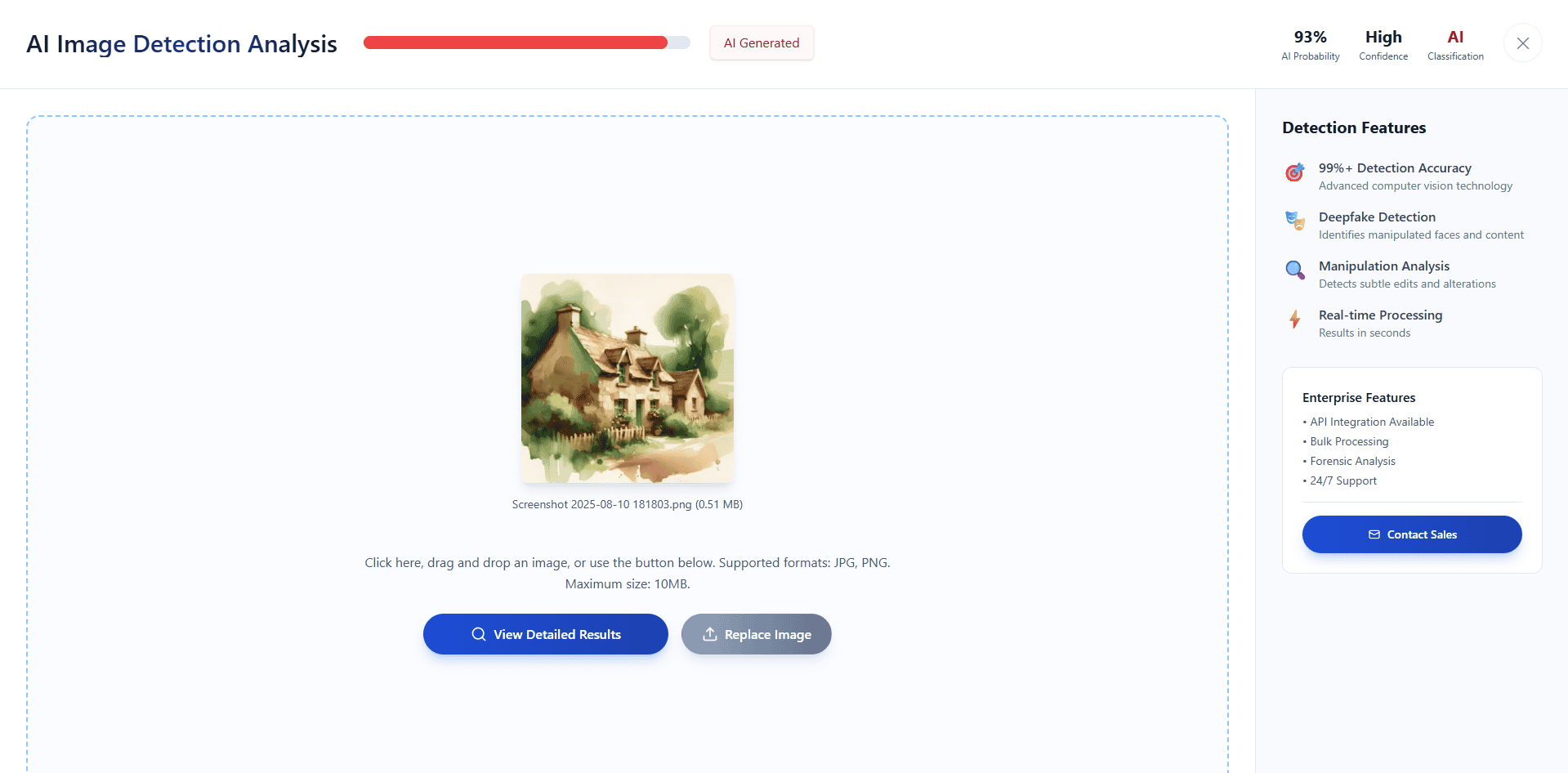

Test #2

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 93%

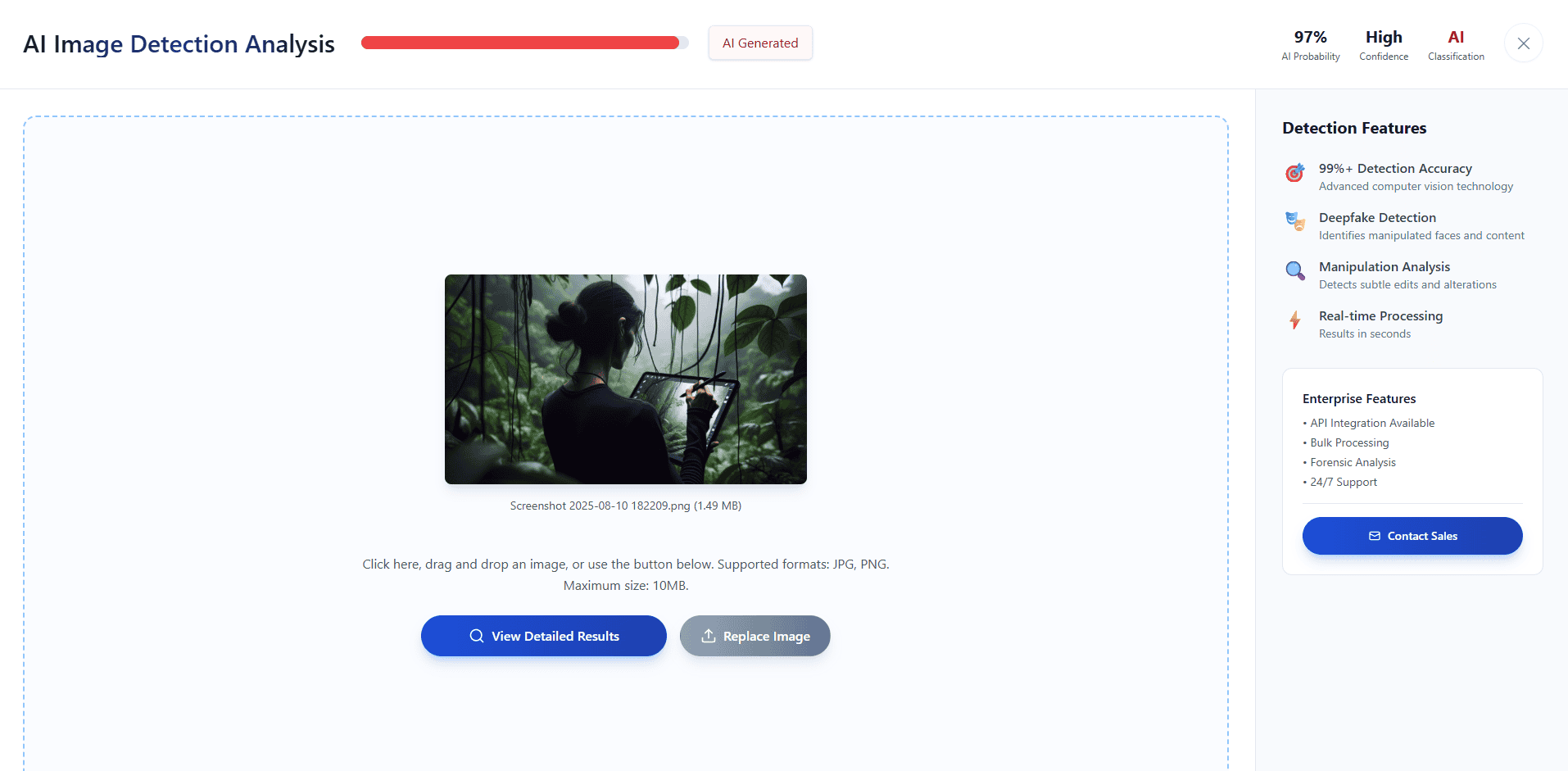

Test #3

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 98%

Test #4

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 97%

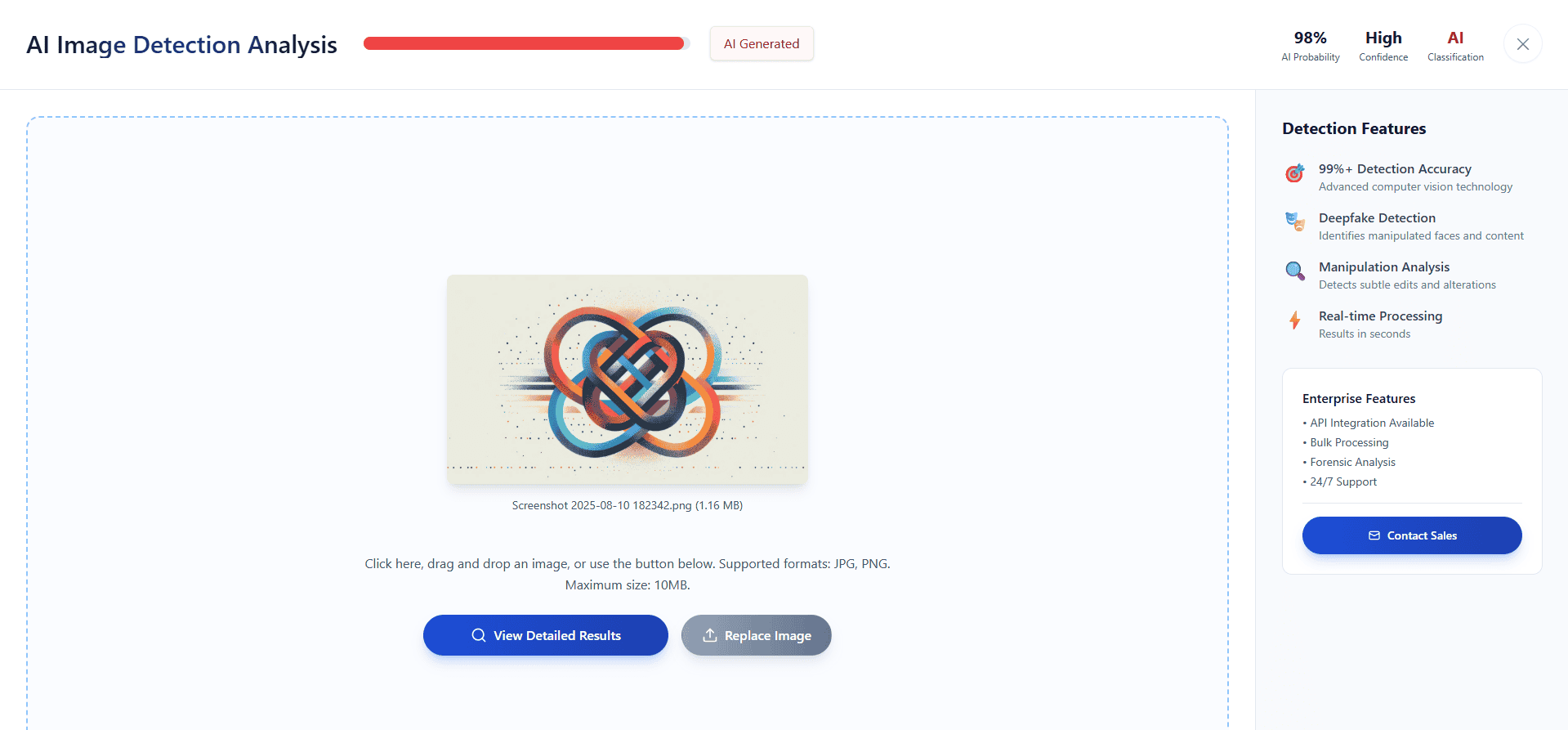

Test #5

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 98%

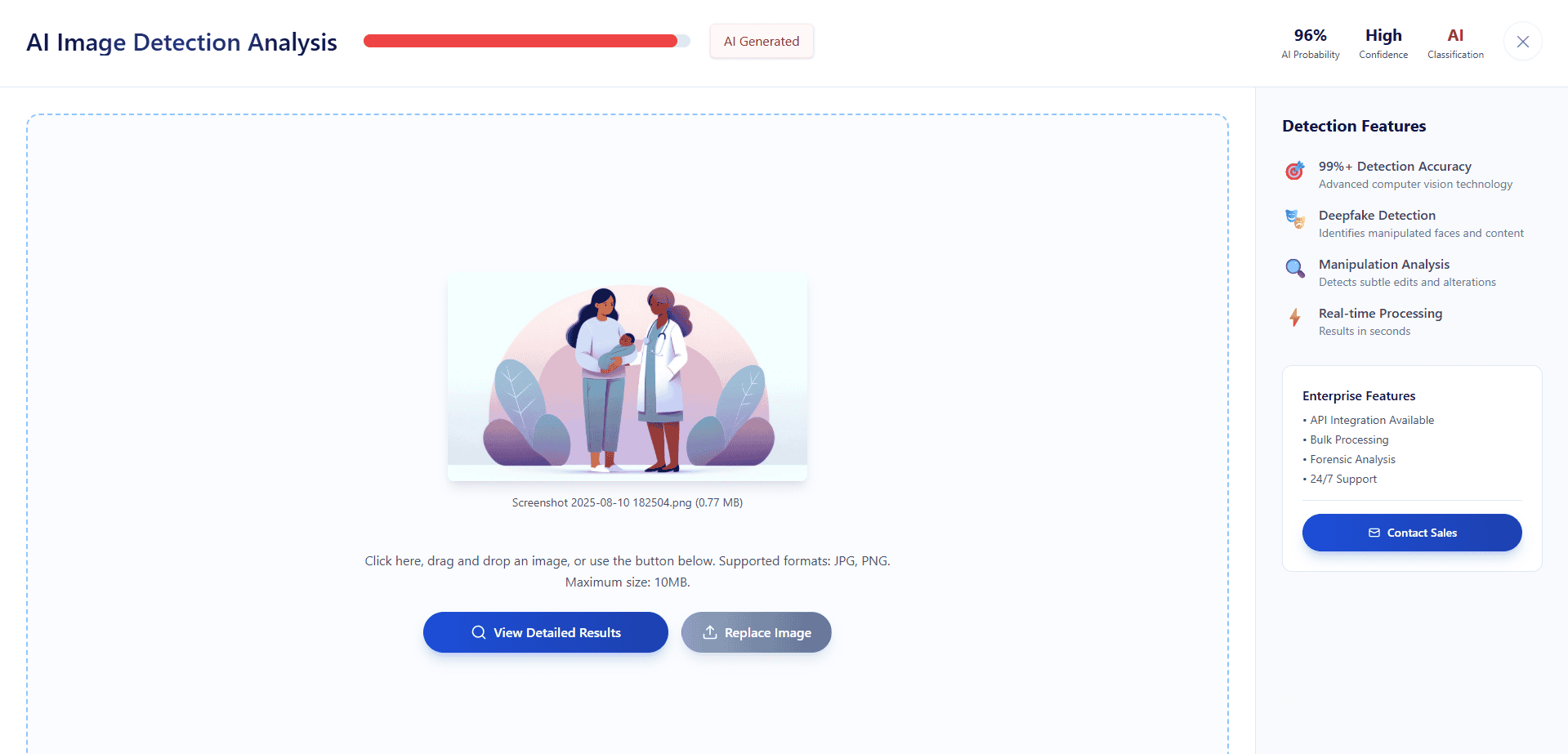

Test #6

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 96%

Test #7

TruthScan: Correctly classified the image as AI-generated.

AI Likelihood Score: 98%

Average Score

| Test | TruthScan AI Likelihood Score |
| --- | --- |
| #1 | 97% |
| #2 | 93% |
| #3 | 98% |
| #4 | 97% |
| #5 | 98% |
| #6 | 96% |
| #7 | 98% |
| Average | 96.71% |
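The average in the table is the arithmetic mean of the seven AI Likelihood Scores (677 / 7 ≈ 96.71%). That number is not the same as the detection rate, which is the share of images TruthScan classified correctly. In these tests, TruthScan got all seven right. The short Python check below computes both from the results reported above. The `verdicts` list simply restates those seven reported classifications.

```python
from statistics import mean

# AI Likelihood Scores (percent) reported for Tests #1-#7.
scores = [97, 93, 98, 97, 98, 96, 98]

# TruthScan's reported verdict for each of the seven DALL-E images.
verdicts = ["AI-generated"] * 7

average = mean(scores)  # 677 / 7
detection_rate = verdicts.count("AI-generated") / len(verdicts)

print(f"Average AI Likelihood Score: {average:.2f}%")  # Average AI Likelihood Score: 96.71%
print(f"Detection rate: {detection_rate:.0%}")          # Detection rate: 100%
```

The average describes how confident TruthScan was. The detection rate describes how often it was right. Seven images make a small sample, so neither figure should be read as a general accuracy benchmark.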

The Bottom Line

DALL-E may not be the powerhouse it once was, but it left its mark on the AI image space. It showed people what was possible, and in doing so, it helped push AI detectors to level up.

TruthScan correctly flagged all seven DALL-E images in our tests, with an average AI Likelihood Score of 96.71%. Even against an older model, it isn’t missing much: it flags DALL-E’s work with a level of certainty that would have been impressive when the model first launched, and it’s still impressive now.

The matchup might feel a little uneven today (newer image models are far tougher tests), but that doesn’t make it irrelevant. Plenty of DALL-E-made content is still circulating online, and catching it quickly helps keep misinformation from slipping through the cracks.

Want to create? DALL-E’s still a fun sandbox. Need to verify? TruthScan’s accuracy shows it’s more than up for the job, even when the images come from a model that helped start it all.
